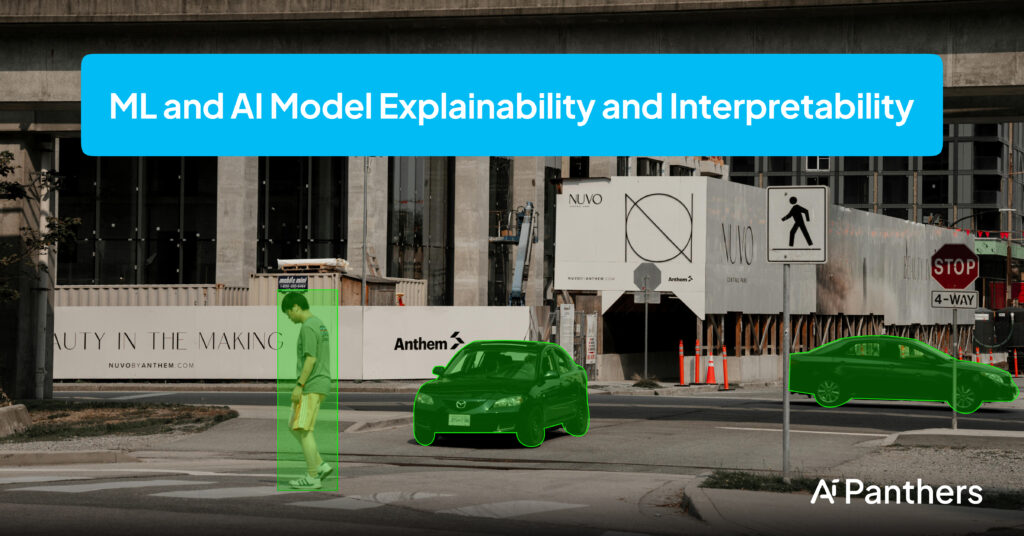

In the rapidly expanding fields of machine learning (ML) and artificial intelligence (AI), models are increasingly used to make significant decisions in a wide range of areas, including healthcare, finance, and transportation. These models are often intricate and complex, built to recognise patterns, make predictions, and automate operations with outstanding accuracy. However, their complexity often obscures the decision-making process, leading to a ‘black box’ problem where understanding how a model reaches a particular conclusion becomes difficult.

The lack of transparency in AI and ML models raises serious concerns, especially in high-stakes applications. Without insight into the logic behind a model’s prediction, it is difficult to detect potential bias, ensure fairness, and build trust among users and stakeholders. This is where explainability and interpretability come into play.

Explainability refers to the ability to describe the reasons behind an AI model’s decisions in a way that is understandable by humans. It means providing clear and understandable explanations of why a model produces a particular prediction or takes a specific action.

Interpretability, on the other hand, concerns the extent to which humans can grasp the underlying mechanisms and cause-and-effect relationships within a model. It provides a general insight into how the model works, taking into account the importance of individual features, the decision rules it uses, and the general logic of its predictions.

Although explainability and interpretability are often used interchangeably, they have distinct meanings and serve different purposes. Interpretability is a prerequisite for explainability since it provides the basis for understanding and explaining a model’s behavior.

The purpose of this blog is to explore the concepts of explainability and interpretability in ML and AI models, examining their significance, differences, techniques, and applications. Explainable and interpretable AI can unlock the full potential of such technologies by supporting transparency, trust, and accountability.

The importance of explainability and interpretability has been underlined by the increasing reliance on AI and ML models in various aspects of life. These properties are necessary for several reasons.

Building Trust: Explainability and interpretability foster trust in AI systems by allowing users to understand how decisions are made. This is particularly essential in sensitive areas such as healthcare and finance, where decisions can have serious consequences. When users understand the rationale behind a model’s prediction, they are more likely to rely on it and act on its recommendations.

Ensuring Fairness: AI models may unintentionally perpetuate or exacerbate existing biases in the data they are trained on. Explainability and interpretability help identify such biases, allowing developers to take corrective action and ensure that the model produces fair and equitable decisions. By understanding what drives the model’s predictions, it becomes easier to identify and reduce potential sources of bias.

Enhancing Model Performance: Explainability and interpretability provide insight into the behavior of a model, enabling developers to identify areas for improvement. By understanding which features matter and how they interact, developers can refine their models, improve performance, and increase generalization.

Meeting Regulatory Requirements: As AI becomes more and more prevalent, regulatory authorities increasingly focus on transparency and accountability. Explainability and interpretability help organizations comply with regulations such as the European Union’s General Data Protection Regulation (GDPR), which gives individuals a right to meaningful information about the logic of automated decisions that affect them.

Enhancing Human Knowledge: Explainable AI helps users understand the underlying patterns and relationships in data. It can improve human insight into complex phenomena and support better decision-making.

Although explainability and interpretability are related concepts, they have distinct meanings and serve different purposes. To apply the appropriate techniques and achieve the desired level of transparency, it is crucial to recognize this difference.

| Feature | Interpretability | Explainability |
| --- | --- | --- |
| Definition | The degree to which a human can understand the cause-and-effect relationships within a model. | The ability to explain the reasons behind an AI model’s decisions in a way that is understandable to humans. |
| Scope | Focuses on the internal mechanisms and representations of the model. | Extends beyond the internal workings of the model to provide meaningful and understandable explanations to users. |
| Granularity | Provides insights into how the model processes and transforms input data, allowing humans to grasp the model’s decision-making process. | Focuses on communicating the reasons behind specific predictions or decisions made by the model. |
| Audience | Primarily targets researchers, data scientists, and experts interested in understanding the model’s behavior and improving its performance. | Has a broader audience, including end-users, domain experts, and regulators who need to understand and trust the AI system’s outputs. |
| Techniques | Feature importance analysis, activation visualization, and rule extraction methods. | Generating textual explanations, visualizing decision processes, and using natural language generation. |
| Goal | To understand how the model works and how it arrives at its predictions. | To provide understandable reasons and justifications for the model’s outputs. |
| Emphasis | Transparency of the model’s internal mechanics. | Clarity and understandability of the model’s outcomes. |
| Relationship | Interpretability is a prerequisite for explainability. | Explainability builds upon interpretability to provide human-readable explanations. |

In essence, interpretability concerns how a model works, while explainability concerns why it produces a particular output. A model may be interpretable without being explainable, but it cannot be explainable without being interpretable.
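To make the distinction concrete, here is a minimal Python sketch built on a toy linear scoring model. Everything in it is a hypothetical illustration: the feature names, the weights, the threshold, and the helper functions `contributions` and `explain` are assumptions made for this example and do not come from any real system. The per-feature contributions show the interpretable side (how the score is assembled), and the generated sentence shows the explainable side (a reason an end user can read).

```python
# Hypothetical loan-scoring model: names, weights, and threshold are
# illustrative assumptions, not taken from any real system.
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = -1.0
THRESHOLD = 0.0


def score(applicant):
    """Linear score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def contributions(applicant):
    """Interpretability: how much each feature adds to the score."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}


def explain(applicant):
    """Explainability: turn the contributions into a sentence for a user."""
    decision = "approved" if score(applicant) >= THRESHOLD else "declined"
    parts = contributions(applicant)
    # Pick the feature with the largest absolute effect on the score.
    top = max(parts, key=lambda name: abs(parts[name]))
    direction = "raised" if parts[top] > 0 else "lowered"
    return (f"Application {decision}: '{top}' {direction} "
            f"the score the most ({parts[top]:+.2f}).")


applicant = {"income": 4.0, "debt": 3.0, "years_employed": 2.0}
print(contributions(applicant))
print(explain(applicant))
```

A linear model is used here only because its inner workings are easy to read. For a more complex model, the contributions themselves would have to be estimated by a dedicated technique before any sentence could be generated from them.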

A number of techniques can be used to enhance the explainability and interpretability of ML and AI models. These techniques can be broadly classified into model-agnostic and model-specific methods.

Model-Agnostic Techniques: These techniques can be applied to any ML model, regardless of its underlying architecture. They provide insight into the behavior of the model without relying on its internal structure.
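One common model-agnostic idea is permutation feature importance: shuffle one input column at a time and measure how much the model’s accuracy drops. The sketch below is a simplified, standard-library-only illustration, not a reference implementation. The `black_box` function, the synthetic data, and the parameter names `n_repeats` and `seed` are assumptions chosen for this example. The key point is that the procedure only ever calls the model’s prediction function and never looks inside it.

```python
import random


def accuracy(predict, rows, labels):
    """Fraction of rows for which the black-box prediction matches the label."""
    hits = sum(predict(row) == label for row, label in zip(rows, labels))
    return hits / len(rows)


def permutation_importance(predict, rows, labels, n_repeats=20, seed=0):
    """Average drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in rows]
            rng.shuffle(shuffled)
            # Rebuild the rows with only this one column permuted.
            permuted = [row[:col] + [value] + row[col + 1:]
                        for row, value in zip(rows, shuffled)]
            drops.append(baseline - accuracy(predict, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical black box: only the first feature influences the output.
def black_box(row):
    return int(row[0] > 0.5)


data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [black_box(row) for row in rows]

importances = permutation_importance(black_box, rows, labels)
print(importances)
```

Because the toy model ignores its second input, shuffling that column leaves accuracy unchanged and its importance is zero, while shuffling the first column causes a clear drop. The same function would work unchanged with any other `predict` callable, which is what makes the approach model-agnostic.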

Model-Specific Techniques: These techniques are tailored to a particular type of ML model and use its internal architecture to provide an understanding of its behavior.
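Rule extraction from a decision tree is one example of a model-specific technique, since it reads the tree’s internal structure directly. The following sketch assumes a small hand-written tree stored as nested dictionaries. The `TREE` layout, the feature names, the thresholds, and the `extract_rules` helper are all hypothetical and serve only to illustrate the idea.

```python
# Hypothetical, hand-written tree. Internal nodes test "feature <= threshold";
# leaves carry the predicted label.
TREE = {
    "feature": "age", "threshold": 30,
    "left": {"label": "low risk"},
    "right": {
        "feature": "blood_pressure", "threshold": 140,
        "left": {"label": "low risk"},
        "right": {"label": "high risk"},
    },
}


def extract_rules(node, conditions=()):
    """Walk the tree and return one IF ... THEN ... rule per leaf."""
    if "label" in node:
        premise = " AND ".join(conditions) or "TRUE"
        return [f"IF {premise} THEN {node['label']}"]
    test = f"{node['feature']} <= {node['threshold']}"
    negated = f"{node['feature']} > {node['threshold']}"
    return (extract_rules(node["left"], conditions + (test,))
            + extract_rules(node["right"], conditions + (negated,)))


def predict(node, sample):
    """Follow the same tests to classify one sample."""
    while "label" not in node:
        go_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["label"]


rules = extract_rules(TREE)
for rule in rules:
    print(rule)
```

Unlike a model-agnostic method, this code depends on the model being a tree: it would have nothing to traverse if handed a neural network or a linear model.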

Explainable and interpretable AI has numerous applications across various domains, including healthcare, finance, transportation, and criminal justice.

Despite the significant progress in explainable and interpretable AI, several challenges remain, including the complexity trade-off, scalability, context dependence, evaluation, and causality.

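Future research directions in explainable and interpretable AI include more scalable and efficient explanation methods, more context-aware explanations, and standard metrics for evaluation.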

Explainability and interpretability are key to building trust, ensuring fairness, and improving the performance of ML and AI models. By providing insight into the decision-making process, they let users understand how models arrive at their predictions and identify biases or limitations. Although explainability and interpretability are related concepts, they have distinct meanings and serve different purposes. Interpretability focuses on understanding how the model works, while explainability aims at providing clear explanations of its outputs.

A number of methods, including feature importance analysis, partial dependence plots, LIME, SHAP, and decision trees, can be used to enhance the explainability and interpretability of ML and AI models. These methods have applications in different areas, including healthcare, finance, transportation, and criminal justice.

Although there has been significant progress in explainable and interpretable AI, many challenges remain, including the complexity trade-off, scalability, context dependence, evaluation, and causality. More scalable and efficient explanation methods, more context-aware explanations, and standard metrics for evaluation will be part of future research.

By adopting explainable and interpretable AI, we can address the problems associated with opaque decision-making processes and exploit the full potential of these technologies. This will lead to more reliable, trustworthy, and beneficial AI systems that can improve our lives and society as a whole.